Install Data Quality Services

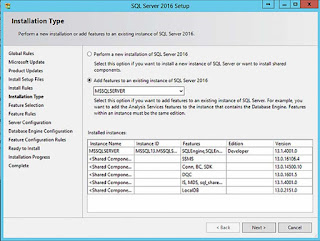

Installing Data Quality Services (DQS) is a two-step process: you first run the SQL Server 2016 Setup wizard, and then you run the Data Quality Server Installer, a command-prompt-based application.

To start the installation, locate the SQL Server 2016 installation media and run Setup.exe, which opens the SQL Server 2016 Installation Center. Click the Installation menu option, and then click New SQL Server Stand-Alone Installation Or Add Features To An Existing Installation to launch the SQL Server 2016 Setup wizard. Click Next. On the next window, if the SQL Server 2016 Database Engine has not been installed previously, select the installation type option Perform A New Installation of SQL Server 2016. In this case, you also need to install the SQL Server 2016 Database Engine, because Data Quality Services requires it. If there is already an installation of the SQL Server 2016 Database Engine and you would like to add DQS to the exi...